The business value proposition of LLMs is real, and so is the hype, but most available examples are oversimplified. Finding the current age of Leonardo DiCaprio's girlfriend, using an AI Agent tool to look up the weather in San Francisco, or sending a funny joke to Slack does not require enriching the request with your proprietary, behind-the-firewall data and services.

Some questions we hear often are:

- How do businesses harness the power of LLMs and the related toolsets effectively and efficiently?

- How do I maintain the ability to iterate and improve quickly?

- How do I move it all to a highly available production environment and not break the bank?

- How do I do this all in real-time using my data?

Various techniques have emerged or are emerging, including prompt engineering, RAG (Retrieval-Augmented Generation), LLM chains, AI Agents, agent tools, and multi-agent collaboration.

In this article, we focus on AI Agents: how they can be used to produce a business outcome leveraging LLMs, how to plan and build your AI Agent and, most importantly, how to make agents more effective using the business data, apps, and services that sit behind your firewall. So let's get started!

AI Agents can accelerate all types of business outcomes:

- AI Agents can be used in the exploratory/research phase to determine whether there is a path or sequence of steps to the outcome.

- AI Agents can be built to drive an outcome and deployed as agent API(s).

- Multiple AI Agents can collaborate across business outcomes.

Here are a few ways businesses can use AI Agents:

- Help business operations and employees by automating and optimizing repetitive tasks.

- Handle complex tasks autonomously by leveraging integrations across data, apps and tools.

- Assist human teams and improve productivity by providing a conversational interface for time-intensive activities (analyzing data, taking action in one or more systems, generating and distributing notifications, etc.).

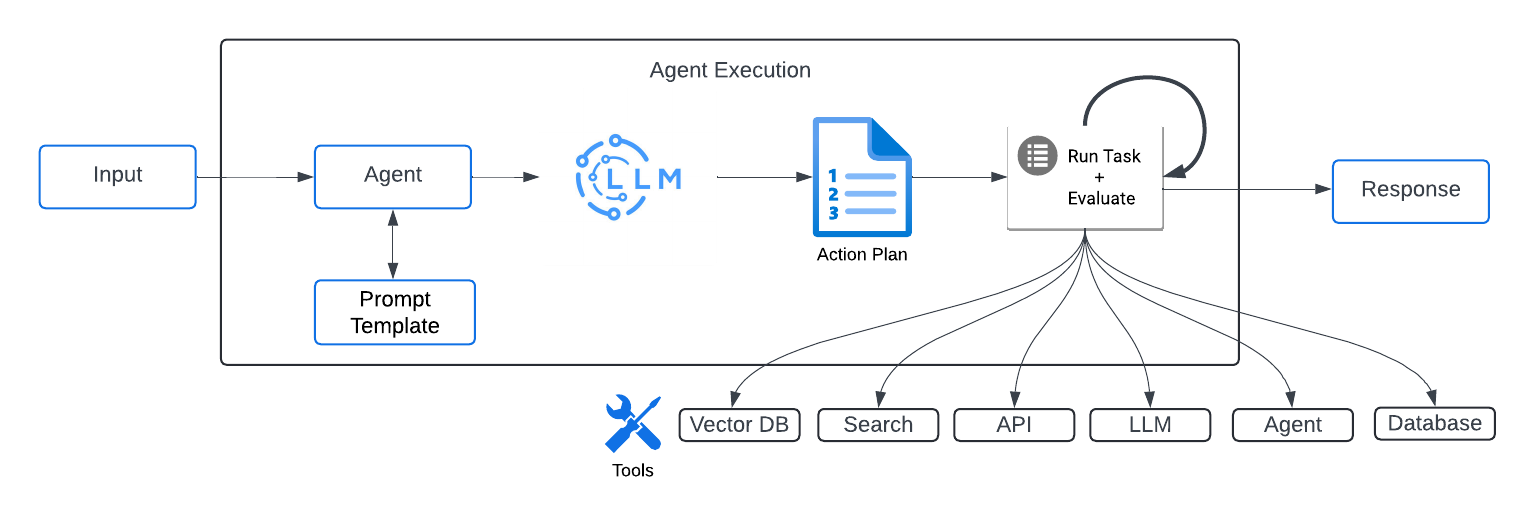

The DNA of an AI Agent has four parts: the user input, an LLM that develops an action plan, execution of the plan using tools, services and other agents, and finally the output.
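The flow described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not how any particular framework implements agents: `plan_with_llm` is a hypothetical stand-in for a real LLM call and returns a hard-coded plan, and the two tools and their data are invented for the example. Real agent frameworks typically re-plan after each tool result rather than fixing the whole plan up front.

```python
from typing import Callable, Dict, List, Tuple

# Toy tools (hypothetical): in a real agent these would call APIs,
# databases, or other agents.
def lookup_company(name: str) -> str:
    directory = {"Ada Lovelace": "Analytical Engines Ltd"}
    return directory.get(name, "unknown")

def lookup_domain(company: str) -> str:
    domains = {"Analytical Engines Ltd": "analyticalengines.example"}
    return domains.get(company, "unknown")

TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup_company": lookup_company,
    "lookup_domain": lookup_domain,
}

def plan_with_llm(user_input: str) -> List[Tuple[str, str]]:
    """Stand-in for the LLM: returns an ordered plan of (tool name, argument).

    The placeholder "$prev" means "use the previous step's result".
    """
    return [("lookup_company", user_input), ("lookup_domain", "$prev")]

def run_agent(user_input: str) -> str:
    plan = plan_with_llm(user_input)      # the LLM develops an action plan
    result = ""
    for tool_name, arg in plan:           # the plan is executed with tools
        arg = result if arg == "$prev" else arg
        result = TOOLS[tool_name](arg)
    return result                         # the final output

print(run_agent("Ada Lovelace"))
```

Swapping `plan_with_llm` for a real model call and the toy tools for real services is, in essence, what the framework examples later in this article do.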

Now that we understand what AI Agents are, how they can be used and the value they can deliver, how do you start building and using AI Agents?

The most common approach is to learn the ropes by starting small and locally using the AI community resources:

- Cloud-based LLMs available via APIs:

  - OpenAI

  - Google Vertex AI

  - Amazon Bedrock

  - Anthropic (Claude)

  - Mistral

- Open-source AI Agent code frameworks (LangChain, LlamaIndex, etc.)

- Prompt template repositories

- Example notebooks

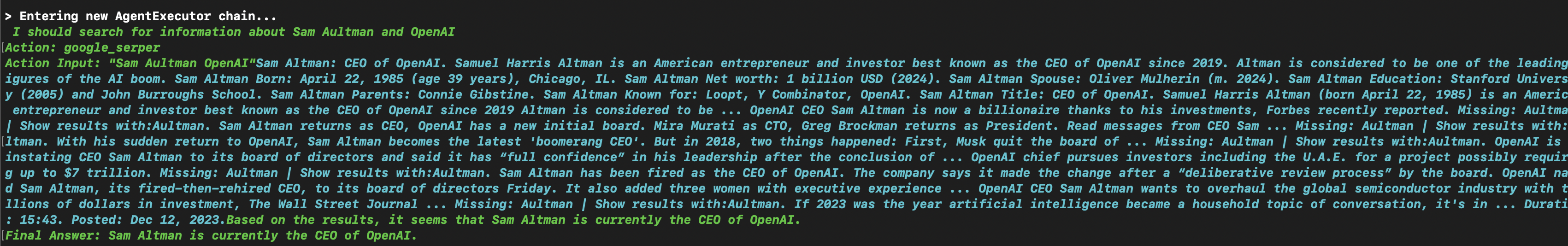

In each of the examples below, a simple agent is built and run to determine where a business contact is currently working. The AI Agents work reasonably well for a famous name like Sam Altman but do not work nearly as well for common or less distinguishable names like mine (Trent McDaniel). In the second run of the AI Agent, the answer is that I have worked at Epiphany for 10 years, even though I have worked for Quickpath since 2005.

While the Google Search API is good at looking up general information and ensuring each search result is consistent (e.g., the weather in San Francisco), we will see in the next section that AI Agents provide more business value when paired with tools that provide more precise data.

```python
import os

from langchain import hub
from langchain.agents import AgentExecutor, create_react_agent, load_tools
from langchain_openai import OpenAI

# Set up environment variables for the Serper and OpenAI API keys
# (SERPER_API_KEY and OPENAI_API_KEY), e.g. via os.environ, before running.

# Select and connect to the LLM
model = OpenAI()

# Load tools; every agent requires at least one tool.
# In this example, we are using the Google Search API (via Serper).
tools = load_tools(["google-serper"], llm=model)

# Download the ReAct prompt from the LangChain hub
prompt = hub.pull("hwchase17/react")

# Initialize the agent
agent = create_react_agent(model, tools, prompt)
agent_executor = AgentExecutor(
    agent=agent, tools=tools, return_intermediate_steps=True, verbose=True
)

# Run the agent for Sam Altman
response = agent_executor.invoke({"input": "What company does Sam Altman currently work that may currently work or previously worked at OpenAI?"})

# Run the agent for Trent McDaniel
response = agent_executor.invoke({"input": "What company does Trent McDaniel currently work that may currently work or previously worked at Epiphany?"})
```

Two common techniques for giving an AI Agent access to data the foundation model has never seen are:

- RAG (Retrieval-Augmented Generation), which is a fancy term for feeding the foundation model additional context that was not available in its training dataset. This technique is often used for business data behind the firewall.

- Creating purpose-fit AI Agent tools/custom functions that provide access to proprietary data behind the firewall.
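The RAG idea in the first bullet can be sketched as follows: retrieve the most relevant internal snippets, then place them in the prompt ahead of the question. Everything here is illustrative. The documents are made up, `retrieve` uses naive word overlap rather than the embedding search a production system would typically use, and the resulting prompt would still need to be sent to an LLM.

```python
import re
from typing import List, Set

# Made-up internal documents standing in for data behind the firewall.
DOCUMENTS = [
    "Contact record: Jane Doe moved from Acme to Globex in 2021.",
    "Globex uses the email domain globex.example.",
    "The cafeteria menu changes every Monday.",
]

def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, documents: List[str]) -> str:
    """Augment the question with the retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

question = "Where does Jane Doe work and what is the Globex email domain?"
prompt = build_prompt(question, DOCUMENTS)
print(prompt)
```

The model never needs to have seen the contact record during training; it only needs the record to appear in the prompt at question time.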

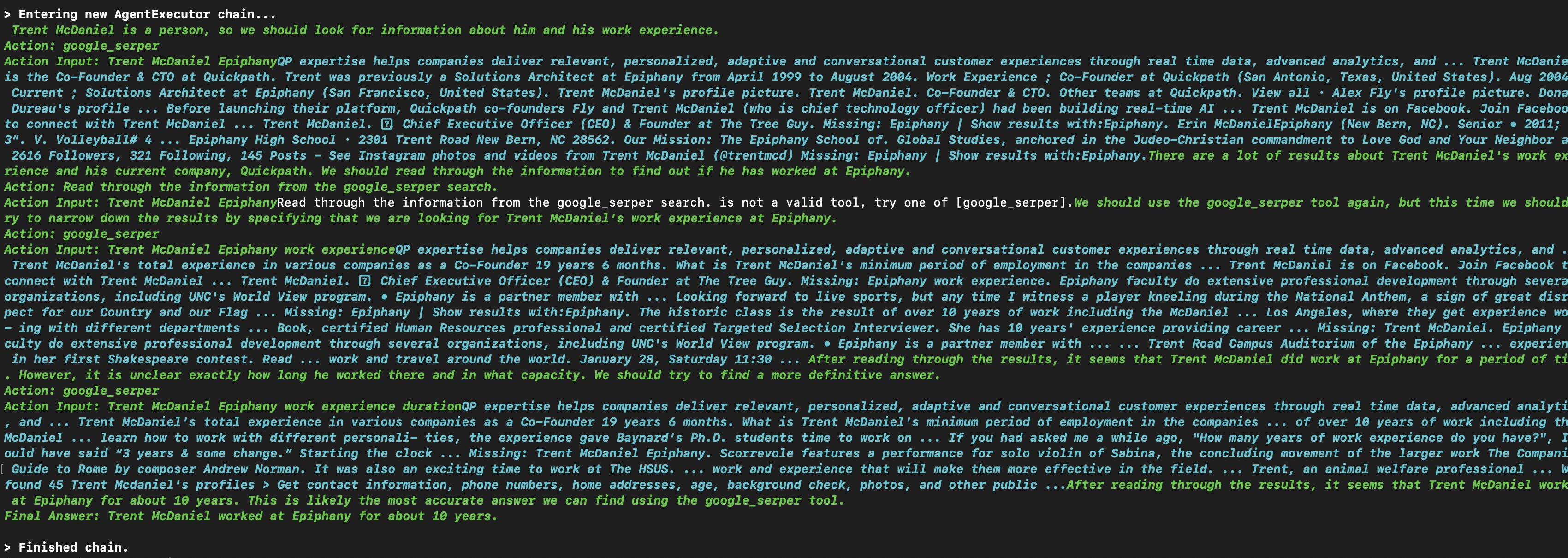

In the next example, we are going to start enriching the AI Agent tools with data that is not available through a Google search. The data could be proprietary business data and/or provided through paid services.

By adding services that provide data behind the firewall, accuracy and relevance improve substantially, and so does the usefulness of the AI Agent. Tuning AI Agents requires decomposing the problem, using the correct set of tools (publicly available data and proprietary data), and managing performance and cost (number of iterations, token usage, etc.).
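The performance and cost management mentioned above can start as a hard cap on iterations and tokens. The sketch below is framework-agnostic and hypothetical: `Budget` and `count_tokens` are names invented here, and the whitespace-based count is only a rough stand-in for a real tokenizer. (LangChain's `AgentExecutor` also accepts a `max_iterations` argument for the iteration cap.)

```python
from dataclasses import dataclass

def count_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())

@dataclass
class Budget:
    max_iterations: int
    max_tokens: int
    iterations: int = 0
    tokens: int = 0

    def charge(self, text: str) -> bool:
        """Record one agent step; return False once either limit is exceeded."""
        self.iterations += 1
        self.tokens += count_tokens(text)
        return (
            self.iterations <= self.max_iterations
            and self.tokens <= self.max_tokens
        )

budget = Budget(max_iterations=3, max_tokens=20)
steps = [
    "search for the contact",
    "look up the company domain",
    "summarize the findings",
    "retry the search",
]
completed = []
for step in steps:
    if not budget.charge(step):
        break  # stop the agent loop before costs run away
    completed.append(step)

print(len(completed), budget.tokens)
```

Here the fourth step is rejected because it would exceed the iteration limit, so only three steps complete.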

```python
import os

import requests
from langchain import hub
from langchain.agents import AgentExecutor, create_openai_tools_agent
from langchain.pydantic_v1 import BaseModel, Field
from langchain.tools import StructuredTool, Tool
from langchain_community.utilities import GoogleSerperAPIWrapper
from langchain_openai import ChatOpenAI

# Set up environment variables for the Serper and OpenAI API keys.
# The key for the internal services is read from the environment too;
# SERVICE_API_KEY is a placeholder name, so use whatever your service expects.
api_key = os.environ["SERVICE_API_KEY"]


# Define tool inputs
class contactInput(BaseModel):
    first_name: str = Field(description="contact first name")
    last_name: str = Field(description="contact last name")
    company_name: str = Field(description="contact current or previous company name")


class companyInput(BaseModel):
    company_name: str = Field(description="contact current or previous company name")


# Define your methods based on internal or external services (API, database, etc.)
def getCurrentCompanyAndPosition(first_name: str, last_name: str, company_name: str):
    """Get the current company and position for a first name and last name"""
    req_obj = {"first_name": first_name, "last_name": last_name, "company_name": company_name}
    headers = {"API-Key": api_key}
    response = requests.post(
        "https://demo/api/service/getContactCurrentPosition/",
        json=req_obj,
        headers=headers,
    ).json()
    # Return only the fields the agent needs
    return {
        "first_name": response["result"]["first_name"],
        "last_name": response["result"]["last_name"],
        "current_company": response["result"]["current_company"],
    }


def getCompanyDomain(company_name: str):
    """Get the domain for a company name"""
    req_obj = {"company_name": company_name}
    headers = {"API-Key": api_key}
    response = requests.post(
        "https://demo.quickpath.com/api/service/getCompanyDomain/design",
        json=req_obj,
        headers=headers,
    ).json()
    return {
        "company_name": response["result"]["company_name"],
        "company_domain": response["result"]["company_domain"],
    }


# Create structured tools from the methods, providing a description
# so the LLM knows how and when to use each one.
getCurrentCompanyAndPosition = StructuredTool.from_function(
    func=getCurrentCompanyAndPosition,
    name="getCurrentCompanyAndPosition",
    description="Get the current company and position for a first name and last name. The results are returned in json.",
    args_schema=contactInput,
)

getCompanyDomain = StructuredTool.from_function(
    func=getCompanyDomain,
    name="getCompanyDomain",
    description="Get the domain for a contact's current company name (for example, Microsoft has the domain microsoft.com)",
    args_schema=companyInput,
)

# Load tools into a tools array to be passed into the agent.
search = GoogleSerperAPIWrapper()
tools = [
    Tool(
        name="Intermediate_Answer",
        func=search.run,
        description="useful for when you need to ask with search",
    ),
    getCompanyDomain,
    getCurrentCompanyAndPosition,
]

# Define your ChatPromptTemplate. For this example, an existing
# ChatPromptTemplate is being pulled from the LangChain hub.
prompt = hub.pull("hwchase17/openai-functions-agent")

# Choose the LLM that will drive the agent
llm = ChatOpenAI(temperature=0, model="gpt-4")

# Initialize the agent
agent = create_openai_tools_agent(tools=tools, llm=llm, prompt=prompt)

# Initialize the agent executor
agent_executor = AgentExecutor(
    agent=agent,
    tools=tools,
    verbose=True,
    handle_parsing_errors=True,
    return_intermediate_steps=True,
)

# Invoke the agent
response = agent_executor.invoke(
    {"input": "Determine current company name and position for Trent McDaniel that currently or previously worked at Epiphany and find the email domain for his current company"}
)
```

Now that your AI Agent is built and tested locally, the next step is to deploy and leverage the agent at scale within your business. When deploying an AI Agent as an API so it can be triggered through an event or called by a business application, consider including the following components.

- API security

- Data Privacy

- Agent logging and monitoring

- Capturing feedback

- Test Harnesses

- Change and Release Processes

- Etc.

These components will ensure you can start working on the next AI Agent instead of spending all your time managing the first one.
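As a rough illustration of how a few of these components fit around an agent, the sketch below wraps an agent call with an API key check, metadata-only logging, and a feedback store. It is deliberately framework-free. `handle_request`, `record_feedback`, `fake_agent`, and the in-memory stores are hypothetical names for this example; a real deployment would more likely use a web framework, a secrets manager, and durable storage.

```python
import hmac
import logging
import time
import uuid
from typing import Callable, Dict

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("agent_api")

VALID_API_KEYS = {"demo-key"}    # placeholder; load from a secrets manager in practice
FEEDBACK: Dict[str, str] = {}    # request_id -> rating; in-memory for illustration only

def fake_agent(question: str) -> str:
    """Stand-in for a real agent call such as agent_executor.invoke(...)."""
    return f"echo: {question}"

def is_authorized(api_key: str) -> bool:
    # compare_digest avoids leaking key contents through timing differences
    return any(hmac.compare_digest(api_key, k) for k in VALID_API_KEYS)

def handle_request(api_key: str, question: str,
                   agent: Callable[[str], str] = fake_agent) -> dict:
    if not is_authorized(api_key):
        return {"status": 401, "error": "invalid API key"}
    request_id = str(uuid.uuid4())
    started = time.perf_counter()
    answer = agent(question)
    elapsed_ms = (time.perf_counter() - started) * 1000
    # Log metadata rather than the raw question to limit exposure of private data
    logger.info("request_id=%s elapsed_ms=%.1f question_chars=%d",
                request_id, elapsed_ms, len(question))
    return {"status": 200, "request_id": request_id, "answer": answer}

def record_feedback(request_id: str, rating: str) -> None:
    """Attach user feedback to an earlier request for later review."""
    FEEDBACK[request_id] = rating

ok = handle_request("demo-key", "Where does Jane Doe work?")
record_feedback(ok["request_id"], "thumbs_up")
denied = handle_request("wrong-key", "Where does Jane Doe work?")
print(ok["status"], denied["status"])
```

Because the agent is passed in as a plain callable, the same wrapper doubles as a simple test harness: a stub agent can be swapped in to exercise the security and logging paths without calling an LLM.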

We have shown some examples and recommendations for building, improving, and putting your AI Agent into action. The video below shows how the Quickpath Platform provides a low-code/no-code platform to build, deploy, and manage AI Agents in minutes.